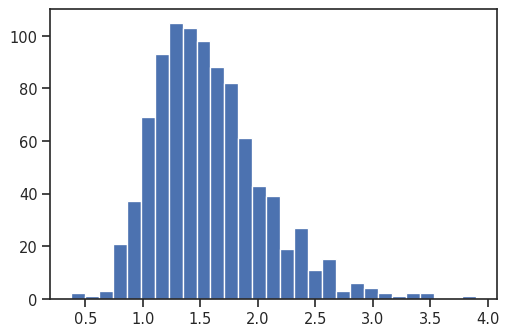

Distribution of TAD strength scores

Using some advanced techniques, it’s possible to calculate an arbitrary score for each snippet that contributed to the final pileup, and save those values within the pileup dataframe. This can be used to investigate whether the contribution features are all similar, or only some outliers cause enrichment.

Here as an example, we can calculate and store the TAD strength for each TAD that was averaged.

# If you are a developer, you may want to reload the packages on a fly.

# Jupyter has a magic for this particular purpose:

%load_ext autoreload

%autoreload 2

# import standard python libraries

import matplotlib as mpl

%matplotlib inline

mpl.rcParams['figure.dpi'] = 96

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import seaborn as sns

# import libraries for biological data analysis

from coolpuppy import coolpup

from coolpuppy.lib.numutils import get_domain_score

from coolpuppy.lib.puputils import accumulate_values

from coolpuppy import plotpup

import cooler

import bioframe

import cooltools

from cooltools.lib import io

from cooltools import insulation, expected_cis

from cooltools.lib import plotting

# Downloading test data for pileups

# cache = True will doanload the data only if it was not previously downloaded

# data_dir="./" will force download to the current directory

cool_file = cooltools.download_data("HFF_MicroC", cache=True, data_dir='./')

# Open cool file with Micro-C data:

clr = cooler.Cooler(f'{cool_file}::/resolutions/10000')

# Set up selected data resolution:

resolution = 10000

# Use bioframe to fetch the genomic features from the UCSC.

hg38_chromsizes = bioframe.fetch_chromsizes('hg38')

hg38_cens = bioframe.fetch_centromeres('hg38')

hg38_arms = bioframe.make_chromarms(hg38_chromsizes, hg38_cens)

# Select only chromosomes that are present in the cooler.

# This step is typically not required! we call it only because the test data are reduced.

hg38_arms = hg38_arms.set_index("chrom").loc[clr.chromnames].reset_index()

# call this to automaticly assign names to chromosomal arms:

hg38_arms = bioframe.make_viewframe(hg38_arms)

# Calculate expected interactions for chromosome arms

expected = expected_cis(

clr,

ignore_diags=0,

view_df=hg38_arms,

chunksize=1000000)

First we need to generate coordinates of TADs. It’s quite simple using cooltools.insulation: we get coordinates of strongly insulating regions, which likely correspond to TAD boundaries. Then we just need to combine consecutive boundaries, filter out super long domains, and we have a list of TAD coordiantes.

insul_df = insulation(clr, window_bp=[100000], view_df=hg38_arms, nproc=4,)

insul_df

| chrom | start | end | region | is_bad_bin | log2_insulation_score_100000 | n_valid_pixels_100000 | boundary_strength_100000 | is_boundary_100000 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | chr2 | 0 | 10000 | chr2_p | True | NaN | 0.0 | NaN | False |

| 1 | chr2 | 10000 | 20000 | chr2_p | False | 0.692051 | 8.0 | NaN | False |

| 2 | chr2 | 20000 | 30000 | chr2_p | False | 0.760561 | 17.0 | NaN | False |

| 3 | chr2 | 30000 | 40000 | chr2_p | False | 0.766698 | 27.0 | NaN | False |

| 4 | chr2 | 40000 | 50000 | chr2_p | False | 0.674906 | 37.0 | NaN | False |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 32541 | chr17 | 83210000 | 83220000 | chr17_q | True | NaN | 0.0 | NaN | False |

| 32542 | chr17 | 83220000 | 83230000 | chr17_q | True | NaN | 0.0 | NaN | False |

| 32543 | chr17 | 83230000 | 83240000 | chr17_q | True | NaN | 0.0 | NaN | False |

| 32544 | chr17 | 83240000 | 83250000 | chr17_q | True | NaN | 0.0 | NaN | False |

| 32545 | chr17 | 83250000 | 83257441 | chr17_q | True | NaN | 0.0 | NaN | False |

32552 rows × 9 columns

# A useful function to combine insulation score valleys into TADs and filter out very long "TADs"

def make_tads(insul_df, maxlen=1_500_000):

tads = (

insul_df.groupby("chrom")

.apply(

lambda x: pd.concat(

[x[:-1].reset_index(drop=True), x[1:].reset_index(drop=True)],

axis=1,

ignore_index=True,

)

)

.reset_index(drop=True)

)

tads.columns = [["chrom1", "start1", "end1", "chrom2", "start2", "end2"]]

tads.columns = tads.columns.get_level_values(0)

tads = tads[

(tads["start2"] - tads["start1"]) <= maxlen

].reset_index(drop=True)

tads["start"] = (tads["start1"] + tads["end1"]) // 2

tads["end"] = (tads["start2"] + tads["end2"]) // 2

tads = tads[["chrom1", "start", "end"]]

tads.columns = ['chrom', 'start', 'end']

return tads

Getting TAD coordinates:

tads = make_tads(insul_df[insul_df['is_boundary_100000']][['chrom', 'start', 'end']])

Define a helper function to store domain scores within each snippet:

def add_domain_score(snippet):

snippet['domain_score'] = get_domain_score(snippet['data']) # Calculates domain score for each snippet according to Flyamer et al., 2017

return snippet

Another helper function to save domain scores when combining snippets into a pileup:

def extra_sum_func(dict1, dict2):

return accumulate_values(dict1, dict2, 'domain_score')

Here we use the low-level coolpuppy API, including the helper functions we defined above:

cc = coolpup.CoordCreator(tads, resolution=10000, features_format='bed', local=True, rescale_flank=1)

pu = coolpup.PileUpper(clr, cc, expected=expected, view_df=hg38_arms, ignore_diags=0, rescale_size=99, rescale=True)

pup = pu.pileupsWithControl(postprocess_func=add_domain_score, # Any function can be applied to each snippet before they are averaged in the postprocess_func

extra_sum_funcs={'domain_score': extra_sum_func}) # If additional values produced by postprocess_func need to be saved,

# it can be done using the extra_sum_funcs dictionary, which defines how to combine them.

INFO:coolpuppy:Rescaling with rescale_flank = 1 to 99x99 pixels

INFO:coolpuppy:('chr2_p', 'chr2_p'): 238

INFO:coolpuppy:('chr2_q', 'chr2_q'): 412

INFO:coolpuppy:('chr17_p', 'chr17_p'): 75

INFO:coolpuppy:('chr17_q', 'chr17_q'): 213

INFO:coolpuppy:Total number of piled up windows: 238

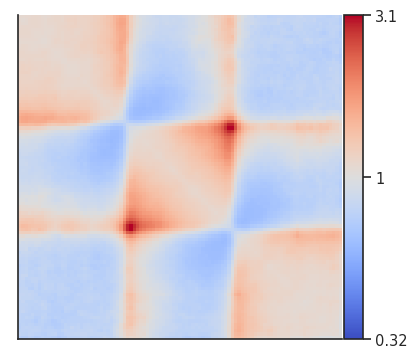

This is the pileup that we got from the previous step:

plotpup.plot(pup,

score=False,

height=5)

<seaborn.axisgrid.FacetGrid at 0x7ff35843abb0>

And here are the domain scores for the first 10 TADs that went into the analysis!

pup.loc[0, 'domain_score'][:10]

[1.3514487857326527,

1.0014944851906267,

1.101405324789513,

0.8571123334522476,

2.843350393430375,

1.9848390933637012,

0.7560571349817319,

1.1659901426919959,

0.9223474219342431,

1.0465908705072648]

Their distribution as a histogram:

plt.hist(pup.loc[0, 'domain_score'], bins='auto');